Homelab Prometheus & Grafana dashboard

date:

tags: containers docker homelab prometheus grafana raspberry pi metrics

categories: Homelab Containers Observability

last_updated: 2023-10-01 : updated container image tags, updated proxmox exporter, replaced deprecated ansible role for node_exporter

Prometheus is an open-source systems monitoring and alerting toolkit. It collects and stores metrics as time series data, meaning each value is stored with the timestamp at which it was recorded. Prometheus pulls (scrapes) metrics over HTTP, so each monitored source needs an exporter that serves its metrics on an HTTP endpoint that Prometheus can scrape.

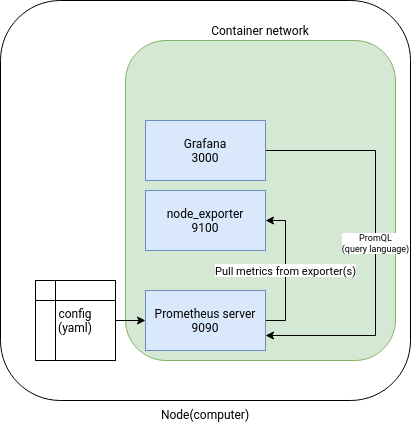

To build a dashboard for monitoring homelab infrastructure, the following components are needed:

- a Prometheus “server”

- one or more exporters that collect metrics

- a data visualization platform (Grafana)

Grafana is an open-source application for visualizing time series data. We will use Grafana to build a homelab dashboard from the metrics gathered by Prometheus.

Deploy Prometheus and Grafana with containers

Here is an overview of the deployment architecture:

If you are not familiar with containers, see a previous post on getting started and installing the software needed to deploy containers.

Prometheus config

First, prepare a Prometheus configuration file in YAML:


File: prometheus.yml
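A minimal sketch of what this file could contain, assuming the containers are reachable on a shared container network under the hypothetical names prometheus and node_exporter, using each exporter's default port and the default /metrics path:

```yaml
# prometheus.yml: minimal sketch; target names are assumptions
global:
  scrape_interval: 15s      # how often each target is scraped
  evaluation_interval: 15s  # how often rules are evaluated (none defined here)

scrape_configs:
  # Prometheus scraping its own metrics
  - job_name: prometheus
    static_configs:
      - targets: ["localhost:9090"]

  # Host metrics from the node_exporter container (default port 9100)
  - job_name: node
    static_configs:
      - targets: ["node_exporter:9100"]
```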


The configuration above scrapes metrics from the containers, which all run on the same container network.

Deploy containers

The Prometheus server can run on any container runtime. Here is a compose template:

File: docker-compose.yaml
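A minimal sketch of such a template, not necessarily the exact file used here: the image tags (latest), service names, volume names, and the network name monitoring are all assumptions, and prometheus.yml is assumed to sit next to the compose file. The port bindings are left commented out.

```yaml
# docker-compose.yaml: minimal sketch; tags, names and the "monitoring"
# network are assumptions (pin specific image tags in practice)
services:
  prometheus:
    image: prom/prometheus:latest
    container_name: prometheus
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml:ro  # config from above
      - prometheus_data:/prometheus                         # TSDB storage
    # ports:
    #   - "9090:9090"
    networks:
      - monitoring
    restart: unless-stopped

  node_exporter:
    image: prom/node-exporter:latest
    container_name: node_exporter
    # Read host metrics from the host root filesystem mounted at /host.
    # On a bridge network, network interface metrics describe the container;
    # node_exporter's docs use host networking when full host stats are needed.
    command:
      - "--path.rootfs=/host"
    pid: host
    volumes:
      - "/:/host:ro,rslave"
    # ports:
    #   - "9100:9100"
    networks:
      - monitoring
    restart: unless-stopped

  grafana:
    image: grafana/grafana:latest
    container_name: grafana
    volumes:
      - grafana_data:/var/lib/grafana  # dashboards, users, settings
    # ports:
    #   - "3000:3000"
    networks:
      - monitoring
    restart: unless-stopped

networks:
  monitoring:
    name: monitoring  # fixed name so other compose projects can join it

volumes:
  prometheus_data:
  grafana_data:
```

From the directory holding both files, docker compose up -d would start the stack.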


This places all of the containers on the same private container network, so the metrics are not exposed in plain text to the local area network.

I have commented out cAdvisor because, at the time of writing, it does not publish an arm64 image.

I have also commented out the port bindings. If you do not want to use a reverse proxy, uncomment the ports: lines and their port mappings; those containers will then be reachable on the matching ports of the system running the containers.

I am deploying these containers to a Raspberry Pi that also runs a reverse proxy. The reverse proxy container is on the same container network as the Prometheus containers, which lets me expose the Grafana dashboards to my local network with TLS encryption. For details on setting up a similar system with Docker and Nginx Proxy Manager, see a previous post on the subject.
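If the reverse proxy lives in its own compose project, one way to attach it to the same network is to declare that network as external. A sketch, assuming the monitoring stack created a network named monitoring (a hypothetical name) and using the jc21/nginx-proxy-manager image; a proxy host in Nginx Proxy Manager could then forward to grafana on port 3000 by container name:

```yaml
# Reverse proxy compose file: sketch; the "monitoring" network name is an assumption
services:
  nginx-proxy-manager:
    image: jc21/nginx-proxy-manager:latest
    ports:
      - "80:80"    # HTTP
      - "443:443"  # HTTPS, TLS is terminated here
      - "81:81"    # admin web UI
    volumes:
      - ./data:/data
      - ./letsencrypt:/etc/letsencrypt
    networks:
      - monitoring
    restart: unless-stopped

networks:
  monitoring:
    external: true  # created by the monitoring stack, not by this file
```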

Access Grafana

I use a reverse proxy to access Grafana. The proxy also terminates TLS, so traffic is encrypted in transit. See the post linked above for setting up a proxy server. The nginx container must be on the same container network as the containers above.

If you do not want to use a proxy, uncomment the Grafana ports: lines so that port 3000, Grafana's default port, is published on the container host. Then navigate to the hostname or IP address of the container host on port 3000:

http://container_host:3000/

Whether you use a proxy or a published port, the default Grafana credentials are:

- user: admin

- password: admin

Add data source

Grafana needs to be configured to use Prometheus as a data source.

- Navigate to the menu and select “Connections”

- You should see the menu to “Add a new Connection”

- Find or search for “Prometheus”

- Select the button to “Add new data source”

- Find the HTTP section and fill out the form for “Prometheus server URL”

- Enter http://prometheus:9090 into the form (Grafana reaches Prometheus by its container name on the shared network)

- Select “Save and test” at the bottom.

For Grafana versions < 10

- Navigate to the left-hand menu, find the gear icon, and select “Data Sources”.

- Select “Add data source” and then find Prometheus.

- All that is really needed is the HTTP URL setting: enter http://prometheus:9090

- Select “Save and test” to save the configuration.
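As an alternative to the UI steps above, Grafana can provision data sources from YAML files it reads at startup. A sketch, assuming the file is mounted into the Grafana container under /etc/grafana/provisioning/datasources/ (for example with a volume entry like ./datasources.yml:/etc/grafana/provisioning/datasources/datasources.yml:ro, where the local file name is hypothetical):

```yaml
# datasources.yml: Grafana data source provisioning sketch
apiVersion: 1

datasources:
  - name: Prometheus
    type: prometheus
    access: proxy                 # Grafana's backend sends the queries
    url: http://prometheus:9090   # container name on the shared network
    isDefault: true
```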

Add a dashboard

Once prometheus has been added as a data source, a dashboard can be added.

There are free dashboards available online:

https://grafana.com/grafana/dashboards/

I will add Node Exporter Full, which uses the metrics from the node_exporter container.

At this time it has the ID 1860.

- Navigate to the left-hand menu and select “+” > “Import” > “Import via grafana.com”

- Enter the ID of the dashboard you would like to import and then select “Load”

- Select the Prometheus data source when prompted, then select “Import”

- You should now see a dashboard like the one below.

Bonus: add a Proxmox dashboard

To add a Proxmox dashboard, we need an exporter that collects metrics from the Proxmox API. We can either add the exporter to the Prometheus container stack or run it directly on a Proxmox node.

Note added after the Proxmox 8 release

You can still install the PVE exporter on the Proxmox node itself, but Proxmox 8 and later (based on Debian 12) mark the system Python environment as externally managed, and pip refuses system-wide installs by default. I recommend deploying it like the other Prometheus exporters, as a separate container.

When I tried to install the exporter with pip on a Proxmox 8 node, the install failed with an error.


This happens because pip, the Python package manager, would install files into locations managed by the operating system's own package manager, which can break system packages and affect system stability.

For this reason, I recommend running the Proxmox Prometheus exporter as a container alongside Prometheus.

Create a user on the Proxmox node

This step is needed no matter where you run the exporter. The exporter uses this user to read metrics from the Proxmox API and expose them to Prometheus.

Create a non-root user to run the Prometheus exporter program:


Create authentication config

Create a user in Proxmox and assign it the PVEAuditor role.

The official docs show how to create new users.

Create an API token by navigating to:

Datacenter > Permissions > API Tokens > Add

Save the token and add it to a YAML file for the PVE exporter. Proxmox displays the token secret only once, so copy it before closing the dialog. Store this file alongside the Prometheus config file from earlier.

File: pve.yml
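A sketch of pve.yml in the format documented by prometheus-pve-exporter; the user, token name, and token value are placeholders for the user and API token created above. The top-level key (default) is the module name that a scrape job selects with the module parameter:

```yaml
# pve.yml: placeholder credentials, replace with your own
default:
  user: prometheus@pve      # Proxmox user that owns the API token
  token_name: "exporter"    # token ID entered when the token was created
  token_value: "00000000-0000-0000-0000-000000000000"  # token secret
  verify_ssl: false         # Proxmox uses a self-signed certificate by default
```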


Define a container to export proxmox metrics

Add this service to the docker-compose.yaml template alongside Prometheus so that Prometheus can scrape its metrics.
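A sketch of the service definition, assuming the prompve/prometheus-pve-exporter image, that pve.yml sits next to the compose file, and a shared network named monitoring (hypothetical). The exporter listens on port 9221, and /etc/prometheus/pve.yml is the config path used in the upstream Docker example:

```yaml
# Sketch: merge under the existing services: key in docker-compose.yaml.
# Service and network names are assumptions.
services:
  pve_exporter:
    image: prompve/prometheus-pve-exporter:latest
    container_name: pve_exporter
    init: true                                # run a minimal init as PID 1
    volumes:
      - ./pve.yml:/etc/prometheus/pve.yml:ro  # credentials file from above
    # ports:
    #   - "9221:9221"                         # exporter's default port
    networks:
      - monitoring
    restart: unless-stopped
```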


Adding to Prometheus config

On the Prometheus server node(s), update /etc/prometheus/prometheus.yml (when Prometheus runs in a container, edit the prometheus.yml file mounted into it).

Use this job if you run the PVE exporter in a container:
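A sketch of the scrape job, modelled on the relabeling pattern from the prometheus-pve-exporter documentation: the Proxmox nodes are listed as targets, each node is passed to the exporter as the target parameter, and the actual request goes to the exporter container. The node IP and the pve_exporter:9221 address are assumptions:

```yaml
# Sketch: add this job to scrape_configs in prometheus.yml.
scrape_configs:
  - job_name: pve
    metrics_path: /pve
    params:
      module: [default]   # section name in pve.yml
      cluster: ["1"]      # collect cluster-wide metrics
      node: ["1"]         # collect node-level metrics
    static_configs:
      - targets:
          - 192.168.1.10  # Proxmox node to query (placeholder)
    relabel_configs:
      # pass the node address to the exporter as ?target=...
      - source_labels: [__address__]
        target_label: __param_target
      # keep the node address as the instance label
      - source_labels: [__param_target]
        target_label: instance
      # send the scrape request itself to the exporter container
      - target_label: __address__
        replacement: pve_exporter:9221
```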


Use this job if you installed the PVE exporter directly on a node:
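When the exporter runs on the Proxmox node itself, a simpler job can scrape the node directly and ask the exporter to query its local API. A sketch with a placeholder node address; it assumes the exporter was started listening on an address reachable from Prometheus, not only on localhost:

```yaml
# Sketch: exporter installed on the Proxmox node; address is a placeholder.
scrape_configs:
  - job_name: pve
    metrics_path: /pve
    params:
      module: [default]       # section name in pve.yml
      target: ["localhost"]   # exporter queries the API on its own node
    static_configs:
      - targets:
          - 192.168.1.10:9221 # Proxmox node running pve_exporter
```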


Any config change requires Prometheus to be restarted (or sent a SIGHUP so it reloads its configuration). If using a container, the restart would be similar to:


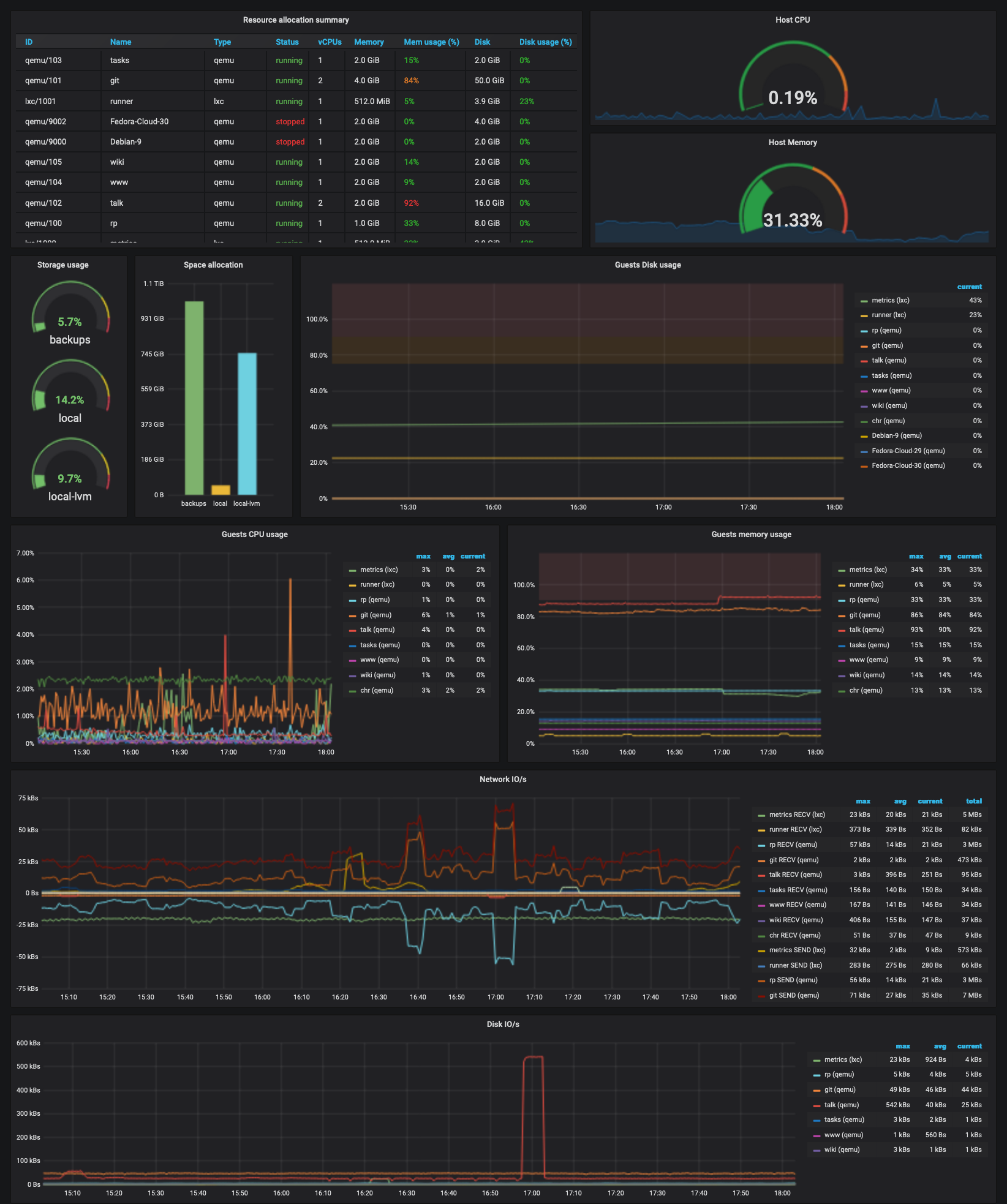

Now add a Proxmox dashboard

At the time of writing, a dashboard compatible with this exporter has ID 10347. After importing it, and once Prometheus has had some time to collect metrics, it should appear similar to this:

Install the exporter on Proxmox node(s)

Not recommended

Note: I recommend skipping this step and using a container instead of installing on the host.


If pip does not work, install the package from the Debian repositories:


Create a systemd unit file to run the exporter:

/etc/systemd/system/prometheus-pve-exporter.service


The ExecStart line runs pve_exporter, and its first argument is the path to the pve.yml config file. When installing on the host, pve.yml must be on the Proxmox system; in this example it was saved to /etc/prometheus/pve.yml.

Now enable and start the service so it begins exporting metrics:


Before proceeding, check that the service status is running rather than failed.

Bonus: add a Windows node exporter

The community also maintains a Prometheus exporter for the Windows operating system, windows_exporter.

Windows exporter installation

The latest release can be downloaded from the GitHub releases page.

Each release provides a .msi installer. The installer sets up windows_exporter as a Windows service and creates an exception in Windows Firewall.

If the installer is run without any parameters, the exporter uses the default settings for enabled collectors, ports, and so on. The following parameters are available:

| Name | Description |
|---|---|
| ENABLED_COLLECTORS | As the --collectors.enabled flag, provide a comma-separated list of enabled collectors |
| LISTEN_ADDR | The IP address to bind to. Defaults to 0.0.0.0 |
| LISTEN_PORT | The port to bind to. Defaults to 9182. |
| METRICS_PATH | The path at which to serve metrics. Defaults to /metrics |
| TEXTFILE_DIR | As the --collector.textfile.directory flag, provide a directory to read text files with metrics from |
| REMOTE_ADDR | Allows setting comma-separated remote IP addresses for the Windows Firewall exception (whitelist). Defaults to an empty string (any remote address). |
| EXTRA_FLAGS | Allows passing full CLI flags. Defaults to an empty string. |

Parameters are passed to the installer on the msiexec command line.


After the exporter starts, verify that it is running: open http://localhost:9182/metrics in a browser on the Windows system and check that metrics are displayed.

From the system running Prometheus, check that you can access the metrics with curl, replacing the hostname with the Windows system's hostname or IP address.


Adding to Prometheus config

On the Prometheus server node(s), update /etc/prometheus/prometheus.yml (when Prometheus runs in a container, edit the prometheus.yml file mounted into it).
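A sketch of the scrape job, assuming a hypothetical hostname windows-pc.lan and the exporter's default port 9182 and /metrics path:

```yaml
# Sketch: add this job to scrape_configs in prometheus.yml.
scrape_configs:
  - job_name: windows
    static_configs:
      - targets:
          - windows-pc.lan:9182   # hypothetical host, windows_exporter default port
```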


Any config change requires Prometheus to be restarted (or sent a SIGHUP so it reloads its configuration). If using a container, the restart would be similar to:


Add a Windows dashboard

One dashboard compatible with this exporter has ID 14510. After importing it, and once Prometheus has had some time to collect metrics, it should appear similar to this:

Next steps

This only scratches the surface of what is possible. All of these dashboards were made by others in the community; you can also write PromQL queries to build your own. I recommend adding a Prometheus node exporter to every computer in your lab. I can now monitor all of my Proxmox hosts, Raspberry Pis, and Windows computers. The other side of system monitoring is logging, which is a topic for another day.

Deploying Prometheus node exporter with Ansible

The community publishes a handy Ansible collection that downloads the Prometheus node exporter to a Linux system and installs a system service to keep the exporter running. If you are not familiar with Ansible, see a previous post to get started. I recommend configuring a firewall so that only the Prometheus server host(s) can connect to the node exporter on port 9100.

To use the community collection:
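The collection published by the Prometheus community on Ansible Galaxy is prometheus.prometheus; assuming that is the one meant here, it can be installed with ansible-galaxy collection install prometheus.prometheus, or pinned in a requirements file like this sketch and installed with ansible-galaxy collection install -r requirements.yml:

```yaml
# requirements.yml: sketch
collections:
  - name: prometheus.prometheus
```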


Create a playbook:
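A minimal sketch of a playbook using the node_exporter role from the prometheus.prometheus collection; the hosts pattern is an assumption to adapt to your inventory:

```yaml
# node_exporter playbook: sketch
- name: Install Prometheus node exporter
  hosts: all          # or a specific inventory group
  become: true        # installing the binary and service requires root
  roles:
    - prometheus.prometheus.node_exporter
```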


Run this against your hosts:


The -K flag prompts for the sudo password; it is optional if sudo is configured to escalate without a password prompt.