Terraform for homelab


tags: proxmox homelab virtual machine containers terraform infrastructure as code

categories: Proxmox Virtual Machines Containers

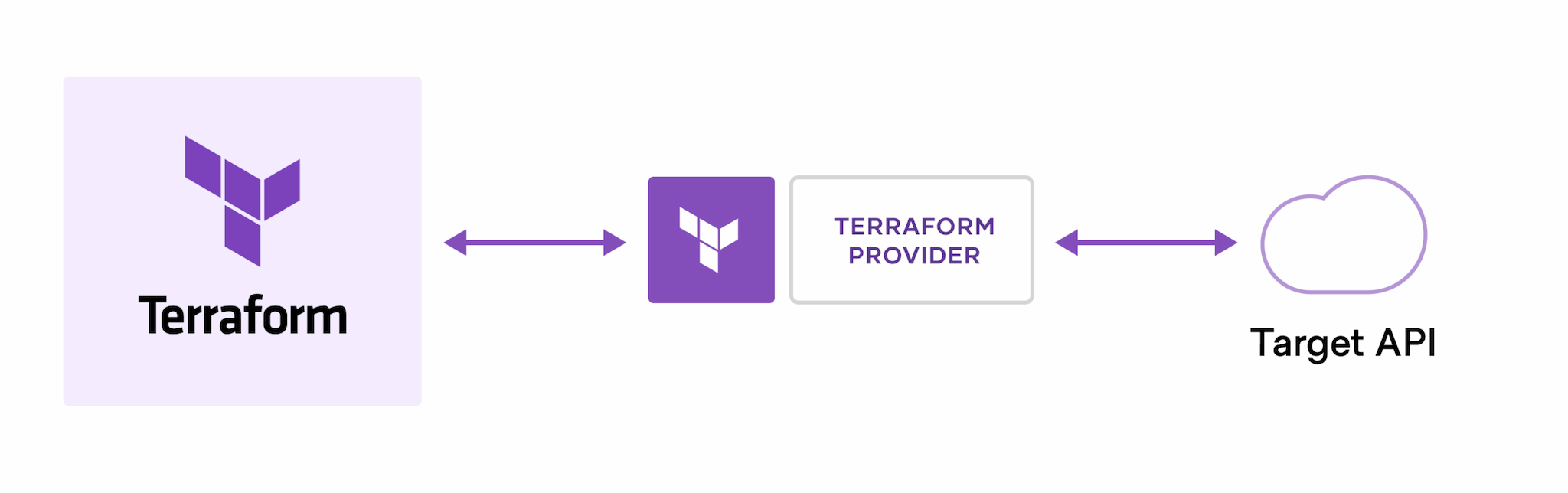

Terraform is a tool for orchestrating infrastructure as code with human-readable configuration files. It can be used to create objects in the cloud and in the homelab. Similar to ansible, terraform abstracts various other APIs used to provision virtual machines, containers, or an entire public cloud ecosystem.

Terraform has an active community that contributes “providers” that interface with various resources and services. I will be switching my entire lab to terraform, and there are existing providers for the platforms that I use: Amazon Web Services, Docker, Kubernetes, github, proxmox, and likely more that I have yet to find.

The workflow for terraform at the most basic level is three stages:

- Define resources in a configuration file.

- Use the terraform program to create a plan, which shows what actions terraform will perform. Terraform will either create, update, or destroy resources.

- Use the terraform program to apply your plan to the target API.

The best part about this is that terraform works out the order of operations. For example, if you are creating a virtual machine in the cloud, terraform will make sure to create the network configuration before creating the virtual machine. Providers can include dependency logic for resources.
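The ordering behaviour can be tried without any cloud account. The sketch below is only an illustration: it uses the built-in terraform_data resource (terraform 1.4 or newer) as a stand-in for a real network and virtual machine, and the resource names and subnet are made up for the example.

```hcl
# Stand-in for a network. terraform_data is built into terraform 1.4+
# and needs no provider, so this file can be applied anywhere.
resource "terraform_data" "network" {
  input = "10.0.0.0/24" # hypothetical subnet
}

# Stand-in for a virtual machine. Referencing the network's output creates
# an implicit dependency, so terraform creates the network first.
resource "terraform_data" "vm" {
  input = "vm attached to ${terraform_data.network.output}"
}
```

Running a plan against this file should list both resources, and an apply creates the network stand-in before the vm stand-in because of the reference between them.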

Terraform is now very popular in the software industry and is something that I get paid to write. In this post I will go over all the providers that I use in my homelab. This was a real game changer for managing my homelab. No more installation wizards!!!

Installation

The good news is that terraform is cross-platform and can run as a single binary executable file. You can download the compiled binary and directly run it on linux, macos, and windows systems. There are also package repositories for installing terraform on each of these platforms.

Manual install

On any platform, you can download the terraform binary and run it in a terminal.

https://www.terraform.io/downloads

macOS

On macOS, I use homebrew to install and update software. Terraform can be installed by adding a tap.

brew tap hashicorp/tap

brew install hashicorp/tap/terraform

Windows

On windows, I suggest the chocolatey package manager. Check out a post on setting up windows if you are not familiar with chocolatey.

Install with chocolatey:

choco install terraform

Linux

The publishers of terraform have package repositories for the following distributions: Debian/Ubuntu, CentOS/RHEL, and Fedora. Check out the download link above for up-to-date commands to add the terraform repo to your distro.

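Here is an example to install the hashicorp repo on fedora. The config-manager syntax shown is the older dnf form and may differ on newer fedora releases:

sudo dnf install -y dnf-plugins-core

sudo dnf config-manager --add-repo https://rpm.releases.hashicorp.com/fedora/hashicorp.repo

sudo dnf -y install terraform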

Also note that this repo includes other hashicorp tools such as packer.

Examples

Here are some examples of how I am using terraform, which should also show how to get started.

Verify that terraform works by running this in a terminal:

terraform version

Docker

Terraform can be used to manage docker containers. If you are not familiar with containers, check out a previous post for the basics and how to get started. My previous posts include lots of applications that are deployed as containers. Those typically have been managed with docker-compose templates in yml format.

Terraform provides another way to manage containers. The reason to use terraform instead of docker-compose could be to unify your infrastructure as code. Rather than have a mess of shell scripts, .yml files, or relying on a gui, terraform can deploy containers and provide a source of truth for the state of your infrastructure.

For this first example, we can create a single terraform template file to start a simple web server container. In the file we must reference the provider that defines docker related resources.
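A minimal sketch of what such a file could look like, assuming the kreuzwerker/docker provider (version 3.x) and a local docker daemon. The nginx image, the resource and container names, and the published port are assumptions chosen for illustration.

```hcl
terraform {
  required_providers {
    docker = {
      source  = "kreuzwerker/docker"
      version = "~> 3.0"
    }
  }
}

# Talks to the local docker daemon using the provider defaults.
provider "docker" {}

# Pull the image; nginx is only an example of a simple web server.
resource "docker_image" "web" {
  name = "nginx:latest"
}

# Start one container from that image and publish it on host port 8080.
resource "docker_container" "web" {
  name  = "web"                       # hypothetical container name
  image = docker_image.web.image_id   # reference makes the image a dependency

  ports {
    internal = 80
    external = 8080
  }
}
```

Saving this as main.tf in an empty directory is enough for the commands that follow.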


That is a very basic example, equivalent to starting the same container with a single docker run command.

Once the file is created, the terraform init command is needed to download the configured docker provider.

terraform init

You should see ‘Terraform has been successfully initialized’ in the output.

Terraform can also format your resource files to clean up white space and indentation:

terraform fmt

Terraform can validate the syntax of the files as well:

terraform validate

And finally we tell terraform to apply the configuration. This command will create resources or verify that they are already running as configured.

terraform apply

Before terraform makes changes, it prints a summary of the changes it will make and prompts the user for confirmation, or it informs the user that everything is up to date.

The output should show ‘Apply complete!’

Verify the container is running with a docker command:

docker ps

After you apply the terraform configuration, a new file called terraform.tfstate is generated in the working directory. This is a text file that includes metadata about the resources that you have created, and terraform uses it to determine if new changes are needed. This file can be inspected in a text editor or by running:

terraform show

Proxmox

Now for what excited me most, the proxmox provider. The proxmox provider can be used to create and manage virtual machines and lxc containers on proxmox systems. If you have a cluster of proxmox nodes, terraform can deploy to any of the nodes. If you are not familiar with proxmox and virtual machines, check out a previous post on how to set it up.

Since I already have resources deployed to proxmox, the terraform import function can be used to allow terraform to manage existing resources.

Unlike the docker provider, the proxmox provider needs credentials to access the proxmox API. I recommend using an API token and avoiding passwords in terraform templates.

In order to better protect credentials, terraform variables can be used to keep credentials out of template files.

Create service account for terraform

Log into the proxmox host terminal or GUI and then:

- Create a new role for terraform

- Create a new user for terraform

- Bind the new user to the new role

- Create an API key and associate it with the new user

Here is a terraform file to manage an existing proxmox lxc container.
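A minimal sketch of such a file, assuming the Telmate/proxmox provider and API token authentication. The variable names, node name, hostname, storage, and sizes are hypothetical and have to match the container that already exists on your proxmox server.

```hcl
terraform {
  required_providers {
    proxmox = {
      source = "Telmate/proxmox" # pin a version for real use
    }
  }
}

# Credentials are declared as variables so they stay out of this file.
variable "pm_api_url" {
  type = string
}

variable "pm_api_token_id" {
  type = string
}

variable "pm_api_token_secret" {
  type      = string
  sensitive = true # keeps the secret out of plan and apply output
}

provider "proxmox" {
  pm_api_url          = var.pm_api_url
  pm_api_token_id     = var.pm_api_token_id
  pm_api_token_secret = var.pm_api_token_secret
}

# Describes an lxc container that already exists (values are assumptions).
resource "proxmox_lxc" "existing" {
  target_node  = "pve"         # hypothetical node name
  hostname     = "existing-ct" # hypothetical container hostname
  unprivileged = true
  memory       = 512

  rootfs {
    storage = "local-lvm"
    size    = "8G"
  }

  network {
    name   = "eth0"
    bridge = "vmbr0"
    ip     = "dhcp"
  }
}
```

The closer these values are to the real container, the fewer changes terraform will propose after the import.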


Once the variables have been declared, you can reference them during execution or in a separate file. For example in a separate file:

vars.tfvars
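A sketch of what this file could contain, assuming the template declares variables named pm_api_url, pm_api_token_id, and pm_api_token_secret. Those names, the host name, and the placeholder values are all hypothetical.

```hcl
# vars.tfvars: keep this file out of version control.
pm_api_url          = "https://proxmox.example.lan:8006/api2/json"
pm_api_token_id     = "terraform@pve!terraform-token" # user@realm!token-name
pm_api_token_secret = "00000000-0000-0000-0000-000000000000"
```

Terraform only loads terraform.tfvars and *.auto.tfvars automatically, so a file with this name has to be passed with the -var-file=vars.tfvars option when running plan, apply, or import.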


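Environment variables can also be used instead of storing the credentials in a file. Terraform reads any environment variable named TF_VAR_ followed by a variable name, so a variable named token would be read from TF_VAR_token.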

Once the terraform template and variables file are ready, initialize the provider:

terraform init

Import existing lxc container

The example terraform template above was for an existing lxc container. To import it to be managed by terraform, run terraform import with the resource address from the template, followed by the location of the container in the form node/lxc/id.

Replace node with the name of the proxmox server and replace id with the ID number given to the lxc container by the proxmox server.

Create a new virtual machine with cloud-init

Proxmox supports cloud-init which makes cloning virtual machines easier. Cloud-init reads configuration data when the virtual machine boots for the first time. Proxmox can pass in enough data to get a working system without you having to step through an installation wizard. It also enables you to deploy many virtual machines at the same time.

Proxmox out of the box can configure the following options with cloud-init:

- User

- Password

- ssh keys

- DNS

- Static IP or DHCP

When using cloud-init, the virtual machine will also have a hostname that matches the name given to proxmox.

Previously I have used packer to create virtual machine templates, but debian and ubuntu linux publish ready-to-use vm templates that are already set up with cloud-init. Let’s download an ubuntu template and make a small tweak to enable the qemu-guest-agent, which will allow terraform to identify networking information when using DHCP to assign an IP address.

Download ubuntu cloud image

Log into the proxmox host. If you have a cluster of proxmox nodes, I recommend using shared storage so they can all utilize the template easily. If you have a shared directory mounted, head there, otherwise going to /tmp is a good option.

wget https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64.img

This will download the latest image for ubuntu 20.04 (focal) into the current directory.

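Now install a package on the proxmox host to tweak this image to support the qemu guest agent. The libguestfs-tools package provides virt-customize, which can install packages into an image file:

apt install libguestfs-tools

virt-customize -a focal-server-cloudimg-amd64.img --install qemu-guest-agent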

Now we can import this image into proxmox as a vm template.

Create a vm with qm create, using id 999 or another number that is unique on your proxmox.

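Import the cloud-init image as the new template’s boot disk. Make sure to replace local-lvm with your proxmox storage if you are not using the default storage.

qm importdisk 999 focal-server-cloudimg-amd64.img local-lvm

After the import, the disk still has to be attached to the vm, a cloud-init drive added, and the vm converted to a template with qm template 999 before it can be cloned.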

Now this template is ready to be used by terraform.

Deploy a virtual machine with terraform

Use the same terraform template as earlier in this post, but replace the proxmox_lxc block with a new block.
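A sketch of what the new block could look like, assuming the Telmate/proxmox provider. The argument names follow the 2.9 series of the provider and may differ in newer releases. The node name, template name, bridge, vm count, and IP range are hypothetical.

```hcl
# Public key injected by cloud-init; set it in a tfvars file or TF_VAR_ssh_key.
variable "ssh_key" {
  type = string
}

resource "proxmox_vm_qemu" "test" {
  count       = 2                            # number of vms to create
  name        = "test-vm-${count.index + 1}" # test-vm-1, test-vm-2
  target_node = "pve"                        # hypothetical node name
  clone       = "ubuntu-focal-template"      # hypothetical template name
  agent       = 1                            # use the qemu guest agent
  os_type     = "cloud-init"
  cores       = 2
  memory      = 2048

  network {
    model  = "virtio"
    bridge = "vmbr0"
  }

  # Static addresses 192.168.1.101, 192.168.1.102; gw must match your gateway.
  ipconfig0 = "ip=192.168.1.${count.index + 101}/24,gw=192.168.1.1"
  sshkeys   = var.ssh_key
}

# Print the address of each vm after apply.
output "vm_ips" {
  value = proxmox_vm_qemu.test[*].default_ipv4_address
}
```

The output block is optional, but it gives a quick way to find each vm if name resolution is not set up on your network.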

A couple of things to note:

- count makes it possible to deploy multiple vms in parallel. With count.index, we can add a suffix to the vm hostname and give each vm a static IP address.

- Make sure to define a public ssh key for the variable ssh_key or there will be no way to access the new virtual machine(s). Alternatively, define a password for the default user, ubuntu, if using the focal template: cipassword = "password".

- ipconfig0 in this example will set a static IP address. Make sure the gw= matches the IP of your router/gateway. To use dhcp, replace the string with:

ip=dhcp

Deploy the template

Initialize the provider with terraform init.

Deploy the template with terraform apply:

terraform apply

Once you enter ‘yes’, terraform will create the vm. When it completes you should see ‘Apply complete!’ followed by any outputs defined in the template.

Now the virtual machine should be remotely accessible. If you cannot log in with the hostname, use the IP in the output.

Next steps

Additional configuration options are included with the providers. Check out the documentation:

- https://registry.terraform.io/providers/kreuzwerker/docker/latest/docs

- https://registry.terraform.io/providers/Telmate/proxmox/latest/docs

Terraform has made it easier for me to create vms to test new software and also makes it easy to clean up after testing is complete.

Clean up

Any resources deployed with terraform can be removed with the command:

terraform destroy